It consists of a set of tools allowing everyone to create their own vector map tiles from OpenStreetMap data for hosting, self-hosting, or offline use.

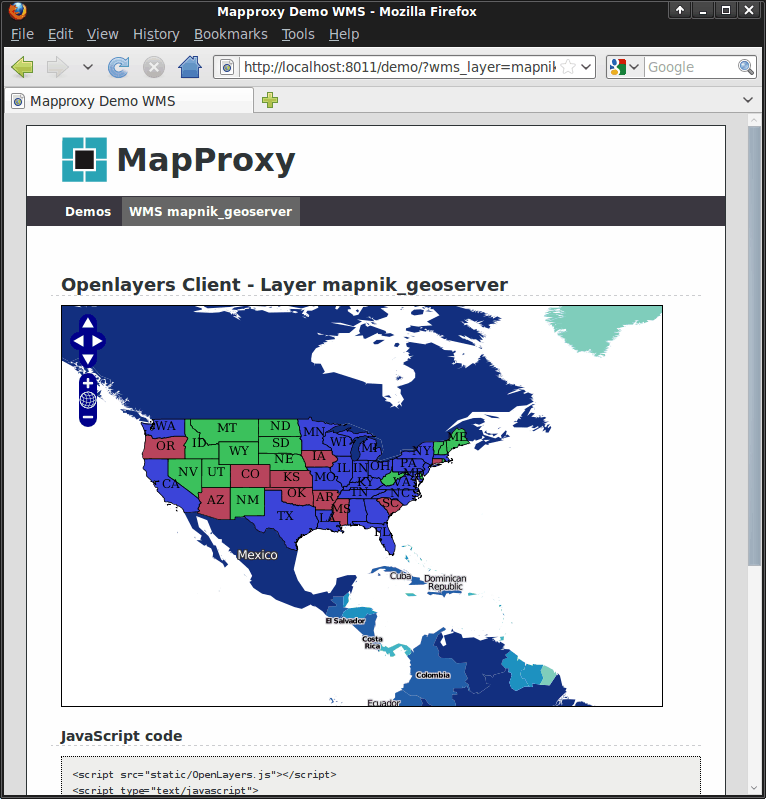

OpenMapTiles is an open-source project aiming to create world maps from open data.

I generated the mbtiles files from the metatiles that were produced, using two small tools:

- meta2tile: a small utility, part of mod_tile, that converts the metatiles used by mod_tile into normal PNG tiles

First off, I am not a coder (I'm a 3D artist) and I am rather new to GIS workflows for georeferenced data, so please bear with me if I'm missing something obvious or using the wrong terminology. My workflow might seem totally bonkers to someone who actually has experience with this type of work.

We are currently experimenting with using gdal2tiles to export raster images of different areas. Previously we used Global Mapper, but we did not find a good solution for exporting different zoom levels that works with KML (Google Earth).

1: Import source data into Global Mapper (mostly aerial photos and height data, but my questions here relate to the aerial raster photos)

3: Export 1 large GeoTIFF (BigTIFF) at a high resolution (10 cm). As an example, my current GeoTIFF is 12.5 GB. This is a very slow process (about 48 hours)

4: Run gdal2tiles from the OSGeo4W64 Shell command line (I followed this guide: )

Here is an example of my command: gdal2tiles --zoom=15-19 --processes=8 "tiff path" "output folder"

This command is now very slow (12+ hours) on the 10 cm GeoTIFF. I ran the same command on a smaller GeoTIFF (1 m instead of 10 cm) and it was very fast.

I'm sure there are a lot of things I could do differently, so I'm basically looking for tips on how to set up a fast, effective workflow that is scalable. Can I, for example, run gdal2tiles on multiple source images instead of the one large GeoTIFF, since the smaller GeoTIFF was a lot faster?
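On the question of tiling from several smaller source images: one common approach is to export several smaller GeoTIFFs, combine them into a virtual mosaic with gdalbuildvrt, and run gdal2tiles once on that mosaic. Below is a minimal Python sketch of that idea, not a tested recipe for this particular data. It only builds the two command lines so they can be inspected before running them in the OSGeo4W shell. The file names (area_a.tif, area_b.tif, mosaic.vrt, tiles) and both helper functions are hypothetical. The zoom and process values are copied from the command in the question. Whether this is actually faster would need to be measured. The sketch also includes the standard Web Mercator resolution formula for 256-pixel tiles, which gives a rough idea of how much source resolution each zoom level can use.

```python
import math
import shlex

# Web Mercator ground resolution (metres per pixel) at zoom 0 on the equator,
# for 256-pixel tiles: 2 * pi * 6378137 / 256.
INITIAL_RESOLUTION = 2 * math.pi * 6378137 / 256


def resolution_at_zoom(zoom, latitude_deg=0.0):
    """Approximate ground resolution in metres per pixel at a zoom level."""
    return INITIAL_RESOLUTION * math.cos(math.radians(latitude_deg)) / (2 ** zoom)


def build_commands(source_tiffs, vrt_path, output_dir, zoom="15-19", processes=8):
    """Return two command lines: mosaic the sources, then tile the mosaic."""
    # gdalbuildvrt writes a small XML file that references the source images;
    # it does not copy any pixel data.
    build_vrt = ["gdalbuildvrt", vrt_path, *source_tiffs]
    # Same options as the command in the question, but reading the VRT mosaic.
    make_tiles = [
        "gdal2tiles",
        f"--zoom={zoom}",
        f"--processes={processes}",
        vrt_path,
        output_dir,
    ]
    return build_vrt, make_tiles


if __name__ == "__main__":
    # Hypothetical file names, for illustration only.
    vrt_cmd, tiles_cmd = build_commands(
        ["area_a.tif", "area_b.tif"], "mosaic.vrt", "tiles"
    )
    print(shlex.join(vrt_cmd))
    print(shlex.join(tiles_cmd))
    for z in (15, 19):
        print(z, round(resolution_at_zoom(z), 3), "m/px at the equator")
```

At the equator, zoom 19 works out to roughly 0.3 m per pixel, and the value shrinks with the cosine of the latitude. Comparing that figure with the 10 cm export may help in deciding whether the full source resolution is needed for zoom levels 15 to 19, or whether a coarser export would do.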

